The Mental Image Revealed by Gaze Tracking

Xi Wang, Andreas Ley, Sebastian Koch, David Lindlbauer, James Hays, Kenneth Holmqvist, Marc Alexa.

Published at CHI 2019

Abstract

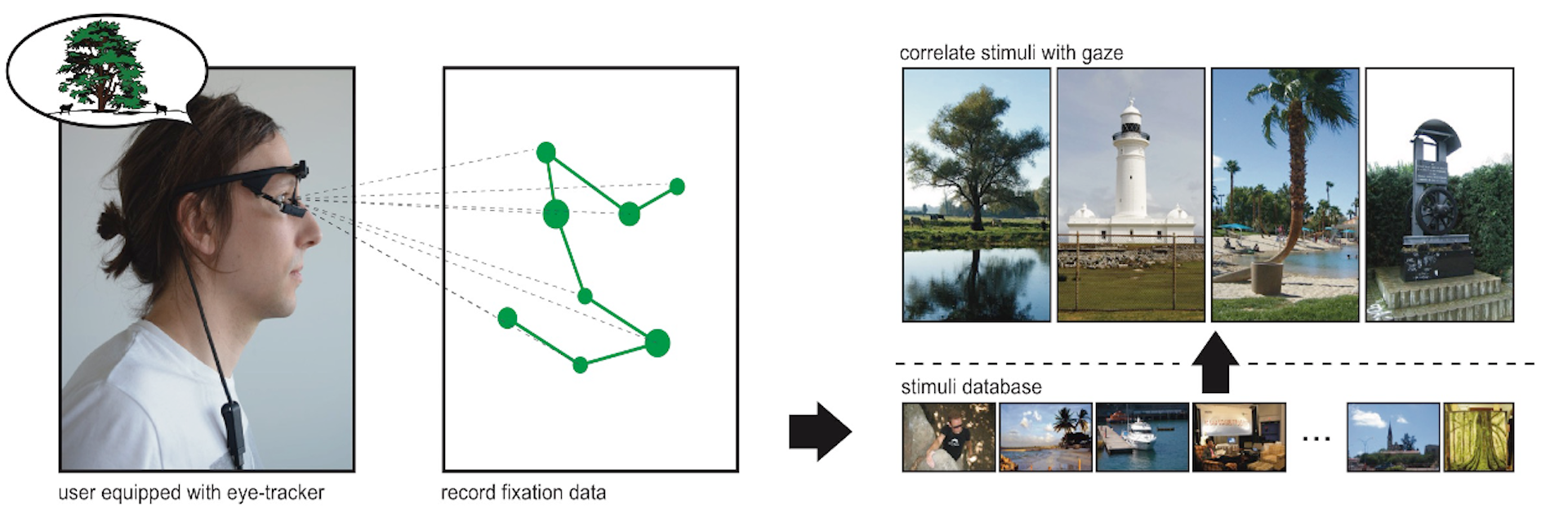

Humans involuntarily move their eyes when retrieving an image from memory. This motion is often similar to the eye movements made while actually observing the image. We suggest exploiting this behavior as a new modality in human-computer interaction, using the motion of the eyes as a descriptor of the image. Interaction requires the user's eyes to be tracked but no voluntary physical activity. We perform a controlled experiment and develop machine-learning-based matching techniques to investigate whether images can be discriminated based on the gaze patterns recorded while users merely think about an image. Our results indicate that image retrieval is possible with an accuracy significantly above chance. We also show that this result generalizes to images not used during training of the classifier and extends to uncontrolled settings in a realistic scenario.
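The abstract does not spell out the matching step, so the following is only a rough illustration of what gaze-based retrieval "above chance" means, not the authors' technique. It assumes each gaze recording has already been reduced to fixation points in normalized screen coordinates, bins them into a coarse grid, and returns the candidate image whose viewing-phase histogram overlaps most with the recall-phase histogram (histogram intersection). The function names, the 4×4 grid, and the similarity measure are hypothetical choices; the paper trains classifiers instead. Chance accuracy for N candidate images is 1/N.

```python
from collections import Counter
from typing import Dict, List, Tuple

Point = Tuple[float, float]  # assumed: normalized screen coordinates in [0, 1]
Histogram = Dict[Tuple[int, int], float]


def fixation_histogram(points: List[Point], grid: int = 4) -> Histogram:
    """Bin fixation points into a grid x grid histogram that sums to 1 (hypothetical feature)."""
    counts: Counter = Counter()
    for x, y in points:
        # Clamp so a coordinate of exactly 1.0 lands in the last cell.
        col = min(int(x * grid), grid - 1)
        row = min(int(y * grid), grid - 1)
        counts[(row, col)] += 1
    total = sum(counts.values())
    return {cell: n / total for cell, n in counts.items()} if total else {}


def intersection(h1: Histogram, h2: Histogram) -> float:
    """Histogram intersection: 1.0 for identical distributions, 0.0 for disjoint ones."""
    return sum(min(p, h2.get(cell, 0.0)) for cell, p in h1.items())


def retrieve(recall_gaze: List[Point], viewing_gaze_by_image: Dict[str, List[Point]], grid: int = 4) -> str:
    """Return the image whose viewing-phase gaze best matches the recall-phase gaze."""
    query = fixation_histogram(recall_gaze, grid)
    return max(
        viewing_gaze_by_image,
        key=lambda name: intersection(query, fixation_histogram(viewing_gaze_by_image[name], grid)),
    )


def chance_accuracy(num_candidates: int) -> float:
    """Expected accuracy of guessing uniformly at random among the candidates."""
    return 1.0 / num_candidates


# Toy usage with made-up fixations (not data from the study).
viewing = {
    "beach": [(0.1, 0.8), (0.2, 0.85), (0.3, 0.9)],    # fixations near the bottom-left
    "skyline": [(0.7, 0.1), (0.8, 0.15), (0.9, 0.2)],  # fixations near the top-right
}
recall = [(0.15, 0.82), (0.25, 0.88)]
print(retrieve(recall, viewing))          # beach
print(chance_accuracy(len(viewing)))      # 0.5
```

A spatial histogram discards the temporal order of fixations, which keeps the sketch short; a real system would likely need features that also capture sequence and tolerate the spatial distortion of recall-phase gaze.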

Materials

BibTeX

@inproceedings{Wang19,
  author = {Wang, Xi and Ley, Andreas and Koch, Sebastian and Lindlbauer, David and Hays, James and Holmqvist, Kenneth and Alexa, Marc},
  title = {The Mental Image Revealed by Gaze Tracking},
  year = {2019},
  isbn = {9781450359702},
  publisher = {Association for Computing Machinery},
  address = {New York, NY, USA},
  url = {https://doi.org/10.1145/3290605.3300839},
  doi = {10.1145/3290605.3300839},
  booktitle = {Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems},
  pages = {1--12},
  numpages = {12},
  keywords = {gaze pattern, eye tracking, mental imagery},
  location = {Glasgow, Scotland, UK},
  series = {CHI '19}
}