User Preference for Navigation Instructions in Mixed Reality

Jaewook Lee, Fanjie Jin, Younsoo Kim, David Lindlbauer.

Published at IEEE VR 2022

Abstract

Current solutions for providing navigation instructions to users who are walking are mostly limited to 2D maps on smartphones and voice-based instructions.

Mixed Reality (MR) holds the promise of integrating navigation instructions directly in users' visual field, potentially making them less obtrusive and more expressive.

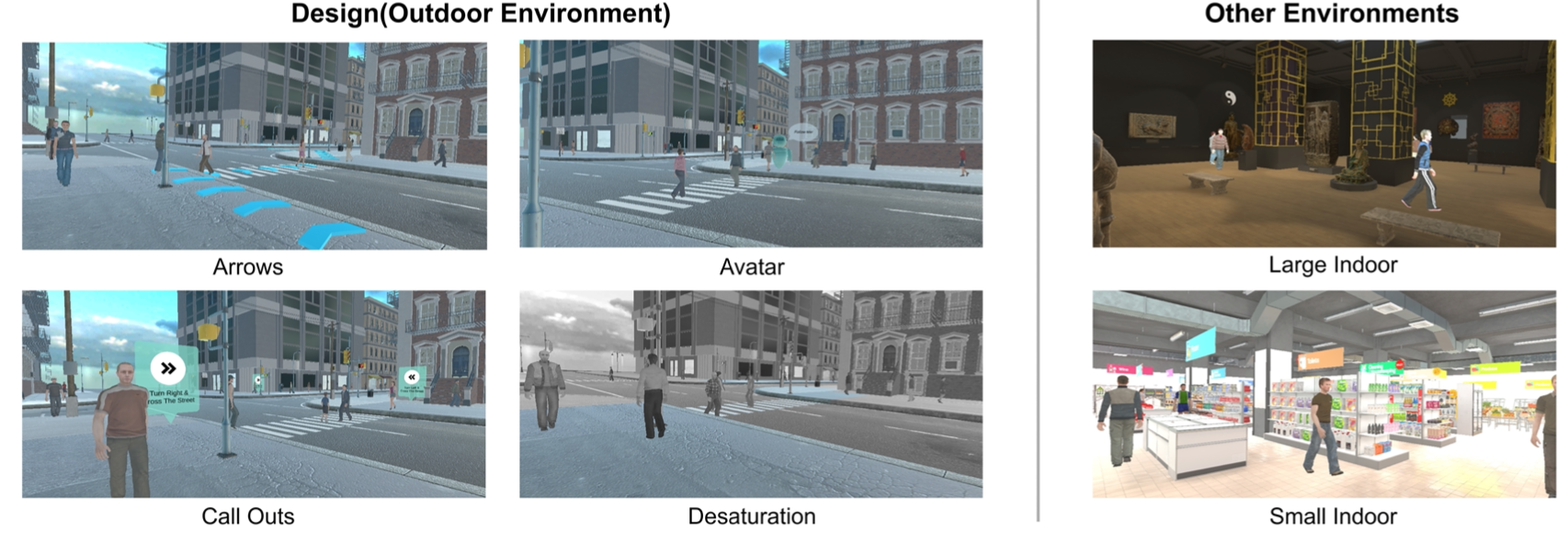

Current MR navigation systems, however, largely focus on using conventional designs such as arrows, and do not fully leverage the technological possibilities.

While MR could present users with more sophisticated navigation visualizations, such as in-situ virtual signage, or visually modifying the physical world to highlight a target, it is unclear how such interventions would be perceived by users.

We conducted two experiments to evaluate a set of navigation instructions and the impact of different contexts such as environment or task.

In a remote survey (n = 50), we collected preference data with ten different designs in twelve different scenarios.

Results indicate that while familiar designs such as arrows are well-rated, methods such as avatars or desaturation of non-target areas are viable alternatives.

We confirmed and expanded our findings in an in-person virtual reality (VR) study (n = 16), comparing the highest-ranked designs from the initial study.

Our findings serve as guidelines for MR content creators, and future MR navigation systems that can automatically choose the most appropriate navigation visualization based on users' contexts.

Bibtex

@inproceedings{Lee2022,
  author = {Lee, Jaewook and Jin, Fanjie and Kim, Younsoo and Lindlbauer, David},
  title = {User Preference for Navigation Instructions in Mixed Reality},
  year = {2022},
  publisher = {IEEE},
  keywords = {Mixed Reality, Navigation, Adaptive user interfaces},
  location = {Virtual Event, New Zealand},
  series = {IEEE VR '2022}
}