First or Third-Person Hearing? A Controlled Evaluation of Auditory Perspective on Embodiment and Sound Localization Performance

Yi Fei Cheng, Laurie Heller, Stacey Cho, David Lindlbauer.

Published at IEEE ISMAR 2024

Abstract

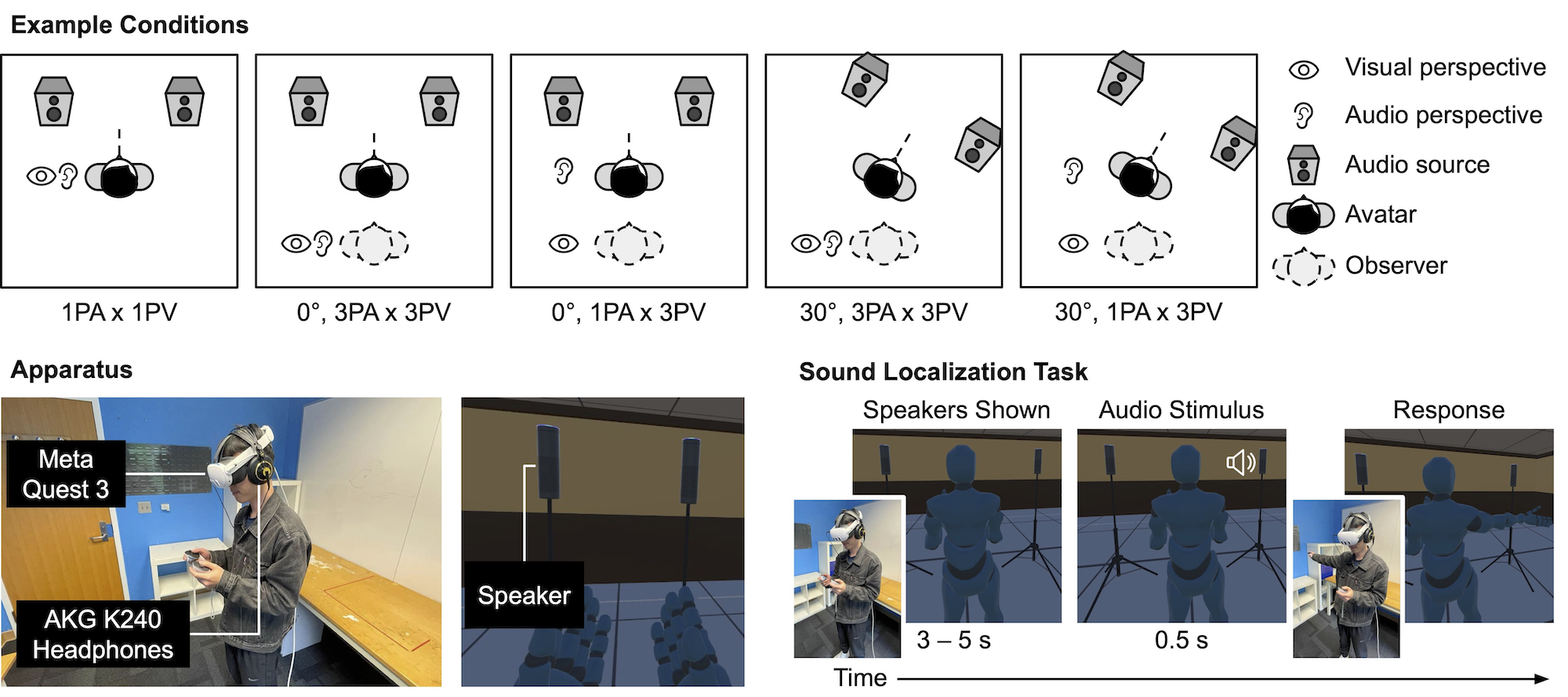

Virtual Reality (VR) allows users to flexibly choose the perspective through which they interact with a synthetic environment. Users can either adopt a first-person perspective, in which they see through the eyes of their virtual avatar, or a third-person perspective, in which their viewpoint is detached from the virtual avatar. Prior research has shown that the visual perspective affects different interactions and influences core experiential factors, such as the user's sense of embodiment. However, there is limited understanding of how auditory perspective mediates user experience in immersive virtual environments. In this paper, we conducted a controlled experiment ($N=24$) on the effect of the user's auditory perspective on their performance in a sound localization task and their sense of embodiment. Our results showed that when viewing a virtual avatar from a third-person visual perspective, adopting the auditory perspective of the avatar may increase agency and self-avatar merging, even when controlling for variations in task difficulty caused by shifts in auditory perspective. Additionally, our findings suggest that differences in auditory perspective generally have a smaller effect than differences in visual perspective. We discuss the implications of our empirical investigation of audio perspective for designing embodied auditory experiences in VR.

BibTeX

@inproceedings{Cheng2024Hearing,
  author = {Cheng, Yi Fei and Heller, Laurie and Cho, Stacey and Lindlbauer, David},
  title = {First or Third-Person Hearing? A Controlled Evaluation of Auditory Perspective on Embodiment and Sound Localization Performance},
  year = {2024},
  publisher = {IEEE},
  doi = {10.1109/ISMAR62088.2024.00022},
  keywords = {Virtual Reality, auditory perception},
  location = {Seattle, WA, USA},
  series = {ISMAR '24}
}