Auptimize: Optimal Placement of Spatial Audio Cues for Extended Reality

Hyunsung Cho, Alexander Wang, Divya Kartik, Emily Liying Xie, Yukang Yan, David Lindlbauer.

Published at ACM UIST 2024

Abstract

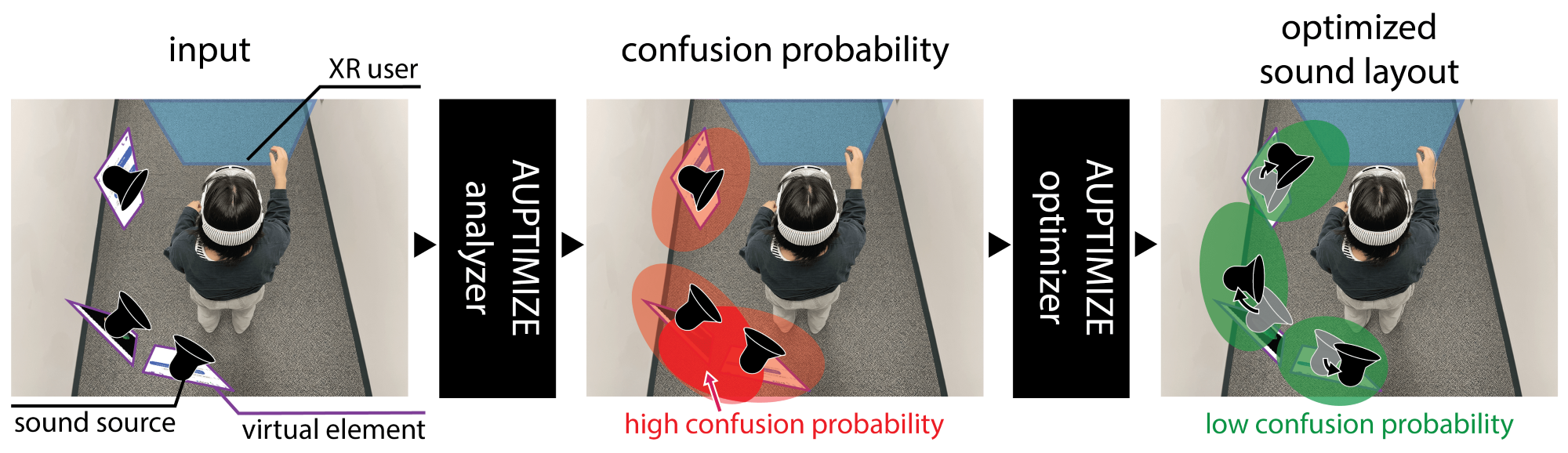

Spatial audio in Extended Reality (XR) provides users with better awareness of where virtual elements are placed, and efficiently guides them to events such as notifications, system alerts from different windows, or approaching avatars. Humans, however, are inaccurate at localizing sound cues, especially with multiple sources, due to limitations in human auditory perception such as angular discrimination error and front-back confusion. This decreases the efficiency of XR interfaces because users misidentify which XR element a sound is coming from. To address this, we propose Auptimize, a novel computational approach for placing XR sound sources, which mitigates such localization errors by utilizing the ventriloquist effect. Auptimize disentangles the sound source locations from the visual elements and relocates the sound sources to optimal positions for unambiguous identification of sound cues, avoiding errors due to inter-source proximity and front-back confusion. Our evaluation shows that Auptimize decreases spatial audio-based source identification errors compared to playing sound cues at the paired visual-sound locations. We demonstrate the applicability of Auptimize for diverse spatial audio-based interactive XR scenarios.
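The relocation idea in the abstract can be illustrated with a toy sketch. This is not the paper's optimizer: it is a brute-force grid search over azimuths only, and every name in it (`place_sounds`, `confusable_sep`, `max_offset`, `step`) is hypothetical. It assumes that a sound shifted up to 20° from its visual element is still attributed to that element (a stand-in for the ventriloquist effect, not a value from the paper), and it models front-back confusion by treating an azimuth and its mirror across the interaural axis as the same direction.

```python
from itertools import combinations, product


def wrap(deg):
    """Wrap an angle in degrees to the interval [-180, 180)."""
    return (deg + 180.0) % 360.0 - 180.0


def ang_dist(a, b):
    """Smallest absolute angular distance between two azimuths, in degrees."""
    return abs(wrap(a - b))


def mirror(a):
    """Front-back mirror of an azimuth (0 = straight ahead, 90 = right)."""
    return wrap(180.0 - a)


def confusable_sep(a, b):
    """Separation between two sources, counting a front-back flip as a match."""
    return min(ang_dist(a, b), ang_dist(a, mirror(b)))


def min_sep(azimuths):
    """Worst-case pairwise separation; needs at least two azimuths."""
    return min(confusable_sep(a, b) for a, b in combinations(azimuths, 2))


def place_sounds(visual_az, max_offset=20.0, step=10.0):
    """Pick one sound azimuth per visual element by exhaustive grid search.

    Assumption: max_offset is an illustrative binding window, not a
    parameter taken from the paper.
    """
    n_steps = int(max_offset // step)
    offsets = [k * step for k in range(-n_steps, n_steps + 1)]
    best_score, best_sounds = None, None
    for combo in product(offsets, repeat=len(visual_az)):
        sounds = [wrap(v + o) for v, o in zip(visual_az, combo)]
        # Maximize the worst-case separation first, then prefer small shifts.
        score = (min_sep(sounds), -sum(abs(o) for o in combo))
        if best_score is None or score > best_score:
            best_score, best_sounds = score, sounds
    return best_sounds


if __name__ == "__main__":
    visual = [20.0, 30.0, 150.0]  # 30 and 150 are front-back mirrors
    sounds = place_sounds(visual)
    print("co-located min separation:", min_sep(visual))
    print("relocated azimuths:", sounds)
    print("relocated min separation:", min_sep(sounds))
```

The search is exponential in the number of elements, so it only suits a handful of sources; it is meant to show the trade-off between separating sources and keeping each sound near its visual element, nothing more.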

Bibtex

@inproceedings{Cho2024Auptimize,
  author = {Cho, Hyunsung and Wang, Alexander and Kartik, Divya and Xie, Emily Liying and Yan, Yukang and Lindlbauer, David},
  title = {Auptimize: Optimal Placement of Spatial Audio Cues for Extended Reality},
  year = {2024},
  publisher = {Association for Computing Machinery},
  address = {New York, NY, USA},
  keywords = {Audio perception, Computational Interaction, Extended Reality},
  location = {Pittsburgh, PA, USA},
  doi = {10.1145/3654777.3676424},
  series = {UIST '24}
}