Predicting the Noticeability of Dynamic Interface Elements in Virtual Reality

Zhipeng Li, Yi Fei Cheng, Yukang Yan, David Lindlbauer

Published at ACM CHI 2024

Abstract

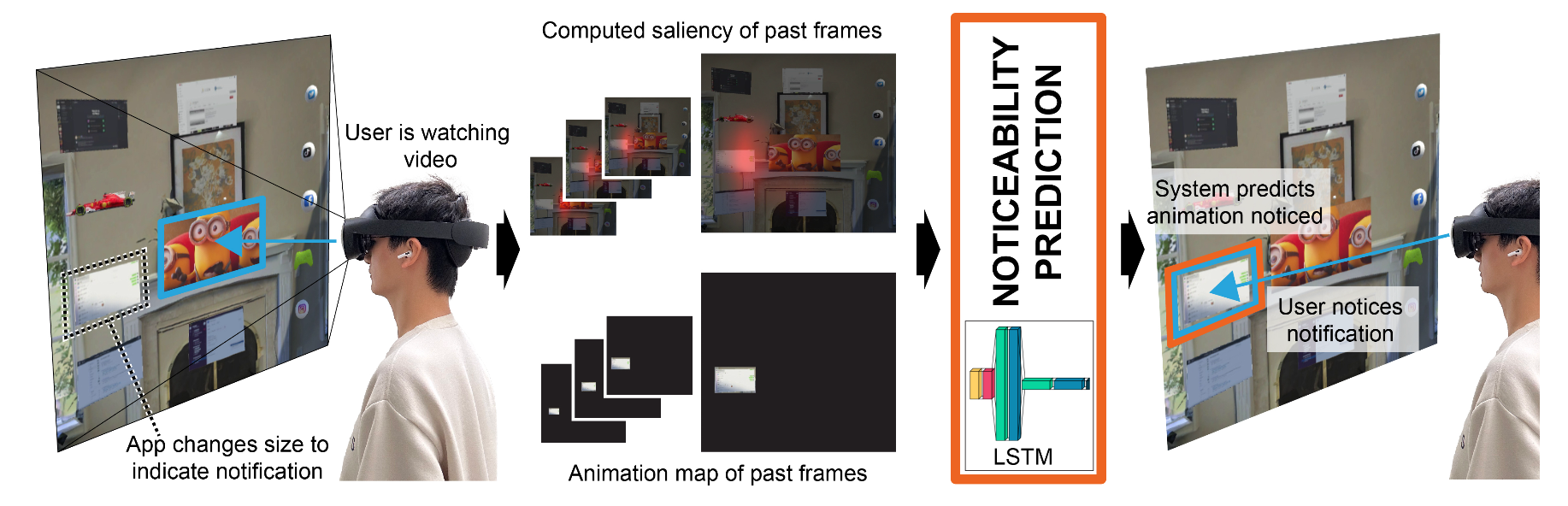

While Virtual Reality (VR) systems can present virtual elements such as notifications anywhere, designing these elements so that users neither miss them nor find them distracting is highly challenging for content creators. To address this challenge, we introduce a novel approach to predict the noticeability of virtual elements. It computes the visual saliency distribution of what users see and analyzes how this distribution changes over time with respect to animated (dynamic) virtual elements. The computed features serve as input to a long short-term memory (LSTM) model that predicts whether a virtual element will be noticed. Our approach is based on data collected from 24 users in different VR environments performing tasks such as watching a video or typing. We evaluate our approach (n = 12) and show that it predicts when users notice a change to a virtual element to within 2.56 s of the ground truth. We further demonstrate the versatility of our approach with a set of applications. We believe that our predictive approach opens the path for computational design tools that help VR content creators build interfaces that automatically adapt virtual elements based on noticeability.
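The pipeline in the abstract (saliency distribution, its change over time relative to an animated element, then an LSTM classifier) can be illustrated with a minimal sketch. This is not the authors' implementation, which is in the repository listed under More information; it is an illustration under our own assumptions. It reduces each frame's saliency map to one hypothetical feature, the share of total saliency inside the element's bounding box, plus that share's frame-to-frame change, and runs the resulting sequence through an untrained, single-layer LSTM with a logistic output. The names `saliency_share`, `temporal_features`, and `TinyLSTM`, the `(x0, y0, x1, y1)` box format, and the random weights are all hypothetical. The saliency maps themselves would come from a separate saliency model, which is not shown.

```python
import math
import random
from typing import List, Sequence, Tuple

# Hypothetical element bounding box: (x0, y0, x1, y1), half-open pixel bounds.
Box = Tuple[int, int, int, int]


def saliency_share(saliency: Sequence[Sequence[float]], box: Box) -> float:
    """Fraction of a frame's total saliency that falls inside the element's box."""
    x0, y0, x1, y1 = box
    total = sum(sum(row) for row in saliency)
    if total == 0:
        return 0.0
    inside = sum(saliency[y][x] for y in range(y0, y1) for x in range(x0, x1))
    return inside / total


def temporal_features(maps, boxes) -> List[List[float]]:
    """Per-frame feature vector: [saliency share, change in share since the previous frame]."""
    feats = []
    prev = None
    for saliency, box in zip(maps, boxes):
        share = saliency_share(saliency, box)
        delta = 0.0 if prev is None else share - prev
        feats.append([share, delta])
        prev = share
    return feats


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


class TinyLSTM:
    """Hypothetical single-layer LSTM forward pass with a logistic output.

    Weights are random and untrained; a real model would be fit to labeled user data.
    """

    def __init__(self, n_in: int, n_hidden: int, seed: int = 0):
        rng = random.Random(seed)
        self.n_hidden = n_hidden
        # One weight matrix per gate (input, forget, candidate, output) over [x, h].
        self.W = {g: [[rng.uniform(-0.5, 0.5) for _ in range(n_in + n_hidden)]
                      for _ in range(n_hidden)] for g in "ifgo"}
        self.b = {g: [0.0] * n_hidden for g in "ifgo"}
        self.w_out = [rng.uniform(-0.5, 0.5) for _ in range(n_hidden)]
        self.b_out = 0.0

    def _gate(self, g: str, xh: List[float]) -> List[float]:
        # Affine pre-activation W_g [x; h] + b_g for one gate.
        return [sum(w * v for w, v in zip(row, xh)) + b
                for row, b in zip(self.W[g], self.b[g])]

    def predict(self, seq: List[List[float]]) -> float:
        h = [0.0] * self.n_hidden
        c = [0.0] * self.n_hidden
        for x in seq:
            xh = x + h  # concatenate input features and previous hidden state
            i = [sigmoid(z) for z in self._gate("i", xh)]
            f = [sigmoid(z) for z in self._gate("f", xh)]
            g = [math.tanh(z) for z in self._gate("g", xh)]
            o = [sigmoid(z) for z in self._gate("o", xh)]
            c = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c, i, g)]
            h = [ok * math.tanh(ck) for ok, ck in zip(o, c)]
        # Probability that the element is noticed, read from the final hidden state.
        return sigmoid(sum(w * hk for w, hk in zip(self.w_out, h)) + self.b_out)


# Usage: two 4x4 frames in which a 2x2 element in the top-left gains saliency.
frame1 = [[1.0] * 4 for _ in range(4)]  # uniform: element holds 4/16 = 0.25
frame2 = [[4.0, 4.0, 1.0, 1.0], [4.0, 4.0, 1.0, 1.0], [1.0] * 4, [1.0] * 4]  # 16/28
box = (0, 0, 2, 2)
feats = temporal_features([frame1, frame2], [box, box])
p_noticed = TinyLSTM(n_in=2, n_hidden=4).predict(feats)
print(feats, p_noticed)
```

Because the weights are untrained, `p_noticed` carries no meaning here; the sketch only shows how per-frame saliency features relative to an element could be turned into a sequence and consumed by an LSTM that emits a single noticeability probability.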

More information

Source code and models are available at https://github.com/ZhipengLi-98/Predicting-Noticeability-in-VR

Materials

BibTeX

@inproceedings{Li24,
  author = {Li, Zhipeng and Cheng, Yi Fei and Yan, Yukang and Lindlbauer, David},
  title = {Predicting the Noticeability of Dynamic Interface Elements in Virtual Reality},
  year = {2024},
  publisher = {Association for Computing Machinery},
  address = {New York, NY, USA},
  url = {https://doi.org/10.1145/3613904.3642399},
  doi = {10.1145/3613904.3642399},
  keywords = {Virtual Reality, Mixed Reality, Computational Interaction},
  location = {Honolulu, HI, USA},
  series = {CHI '24}
}