Exploring AR hand augmentations as error feedback mechanisms for enhancing gesture-based tutorials

Catarina G. Fidalgo,

Yukang Yan,

Mauricio Sousa,

Joaquim Jorge,

David Lindlbauer.

Published at

Frontiers in Virtual Reality

2025

Abstract

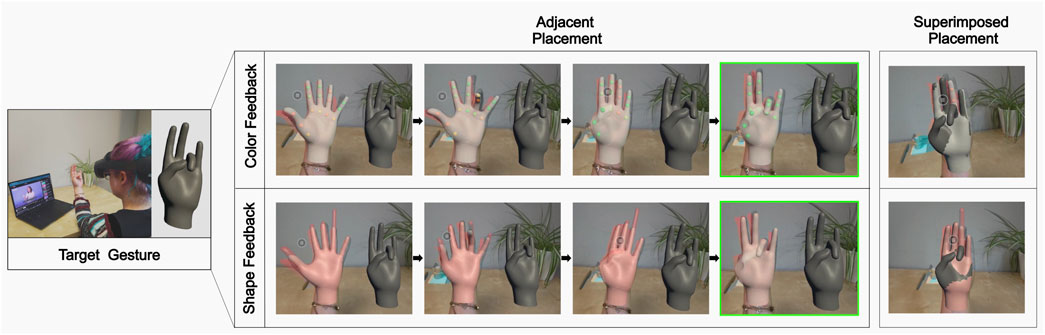

Self-guided tutorials from videos help users learn new skills and complete tasks of varying complexity, from repairing a gadget to learning how to play an instrument. However, users may struggle to interpret 3D movements and gestures from 2D representations due to differing viewpoints, occlusions, and limited depth perception. Augmented Reality (AR) can alleviate this challenge by enabling users to view complex instructions in their 3D space. However, most approaches only provide feedback if a live expert is present and do not consider self-guided tutorials. Our work explores virtual hand augmentations as automatic feedback mechanisms to enhance self-guided, gesture-based AR tutorials. We evaluated different error feedback designs and hand placement strategies on speed, accuracy, and preference in a user study with 18 participants. Specifically, we investigate two visual feedback styles: color feedback, which changes the color of the hands’ joints to signal pose correctness, and shape feedback, which exaggerates finger length to guide correction. We also investigate two placement strategies: superimposed, where the feedback hand overlaps the user’s own, and adjacent, where it appears beside the user’s hand. Results show significantly faster replication times when users are provided with color feedback or the baseline with no explicit feedback, compared to shape manipulation feedback. Furthermore, despite users’ preference for adjacent placement of the feedback representation, superimposed placement significantly reduces replication time. We found no effects on accuracy for short-term recall, suggesting that while these factors may influence task efficiency, they may not strongly affect overall task proficiency.

Bibtex

@article {Fidalgo2025-dh,

author = {Fidalgo, Catarina G and Yan, Yukang and Sousa, Mauricio and Jorge, Joaquim and Lindlbauer, David},

title = {Exploring {AR} hand augmentations as error feedback mechanisms for enhancing gesture-based tutorials},

year = {2025},

volume = {6},

journal = {Frontiers in Virtual Reality},

address = {New York, NY, USA},

keywords = {augmented reality; training; tutorials; hand gestures; error feedback; virtual hand augmentations},

doi = {10.3389/frvir.2025.1574965},

}