Simulating Human Audiovisual Search Behavior

Hyunsung Cho, Xuejing Luo, Byungjoo Lee, David Lindlbauer, Antti Oulasvirta

Published at ACM CHI 2026

Abstract

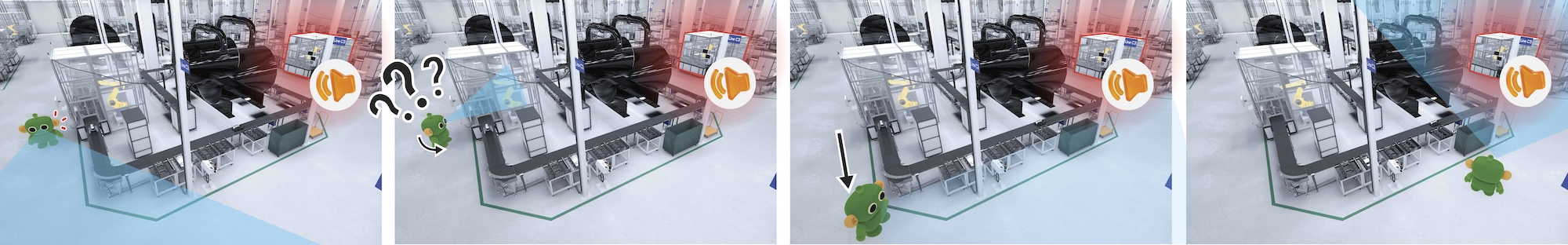

Locating a target based on auditory and visual cues, such as finding a car in a crowded parking lot or identifying a speaker in a virtual meeting, requires balancing effort, time, and accuracy under uncertainty. Existing models of audiovisual search often treat perception and action in isolation, overlooking how people adaptively coordinate movement and sensory strategies. We present Sensonaut, a computational model of embodied audiovisual search. Its core assumption is that people deploy their body and sensory systems in the ways they believe will most efficiently improve their chances of locating a target, trading off time and effort under perceptual constraints. We formulate this as a resource-rational decision-making problem under partial observability. We validate the model against newly collected human data, showing that it reproduces both the adaptive scaling of search time and effort with task complexity, occlusion, and distraction, and the characteristic errors people make. Our simulation of human-like, resource-rational search informs the design of audiovisual interfaces that minimize search cost and cognitive load.
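The abstract frames search as repeatedly choosing the action that best improves the chance of finding the target relative to its time and effort cost, while the target's location is only partially observed. The Python sketch below illustrates that trade-off on a toy problem; it is not Sensonaut's implementation. Everything in it is a hypothetical assumption for illustration: the target sitting in one of a ring of discrete azimuth bins, the Gaussian-shaped auditory prior, the hit and false-alarm rates of a visual check, the cost constants, and the helper names (`auditory_prior`, `visual_update`, `choose_action`, and so on). The paper's keywords mention reinforcement learning; this sketch swaps in a much simpler greedy one-step lookahead over a Bayesian belief.

```python
# Toy sketch of resource-rational audiovisual search (hypothetical; NOT Sensonaut's code).
# Assumptions: the target lies in one of N azimuth bins on a ring; a sound cue gives a broad
# belief; inspecting a bin gives a reliable but imperfect visual check of that bin only;
# each look costs a fixed time penalty plus effort proportional to head rotation.
from math import exp


def normalise(weights):
    """Rescale non-negative weights so they sum to 1."""
    total = sum(weights)
    return [w / total for w in weights]


def circular_distance(a, b, n_bins):
    """Number of bins between a and b on a ring of n_bins azimuth bins."""
    d = abs(a - b) % n_bins
    return min(d, n_bins - d)


def auditory_prior(n_bins, heard_bin, spread):
    """Broad belief from a sound cue: Gaussian-shaped bump around heard_bin."""
    return normalise([exp(-circular_distance(i, heard_bin, n_bins) ** 2 / (2 * spread ** 2))
                      for i in range(n_bins)])


def look_likelihood(i, looked_bin, seen, hit_rate=0.95, false_alarm=0.02):
    """P(visual outcome | target in bin i) after inspecting looked_bin."""
    p_seen = hit_rate if i == looked_bin else false_alarm
    return p_seen if seen else 1.0 - p_seen


def visual_update(belief, looked_bin, seen):
    """Bayesian belief update after one visual check."""
    return normalise([b * look_likelihood(i, looked_bin, seen) for i, b in enumerate(belief)])


def expected_accuracy_after_look(belief, looked_bin):
    """P(correct) if we inspect looked_bin once and then commit to the most likely bin."""
    # For each outcome, P(outcome) * max posterior equals the max joint probability.
    return sum(max(b * look_likelihood(i, looked_bin, seen) for i, b in enumerate(belief))
               for seen in (True, False))


def choose_action(belief, head_bin, time_cost=0.05, effort_per_bin=0.01):
    """One-step lookahead: commit now, or pay time + rotation effort for one more look."""
    n = len(belief)
    best_value = max(belief)  # expected accuracy of answering immediately
    best_action = ("commit", belief.index(best_value))
    for j in range(n):
        cost = time_cost + effort_per_bin * circular_distance(head_bin, j, n)
        value = expected_accuracy_after_look(belief, j) - cost
        if value > best_value:
            best_action, best_value = ("look", j), value
    return best_action


if __name__ == "__main__":
    belief = auditory_prior(n_bins=12, heard_bin=3, spread=1.5)
    print(choose_action(belief, head_bin=0))
```

Run as a script, the sketch prints `('look', 2)`. Bins 2 and 4 are equally informative under this belief, and bin 2 wins because it needs less head rotation from bin 0; with `head_bin=3`, the same belief yields `('look', 3)`, since the rotation-free look is cheapest. A confident belief such as `[0.97, 0.01, 0.01, 0.01]` yields `('commit', 0)`, because no single look raises expected accuracy enough to pay for its cost.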

Materials

Bibtex

@inproceedings{Cho2026Sensonaut,
  author = {Cho, Hyunsung and Luo, Xuejing and Lee, Byungjoo and Lindlbauer, David and Oulasvirta, Antti},
  title = {Simulating Human Audiovisual Search Behavior},
  year = {2026},
  publisher = {Association for Computing Machinery},
  address = {New York, NY, USA},
  doi = {10.1145/3772318.3790614},
  keywords = {Computational behavior modeling; user simulation; multimodal perception; computational rationality; reinforcement learning},
  location = {Barcelona, Spain},
  series = {CHI '26}
}